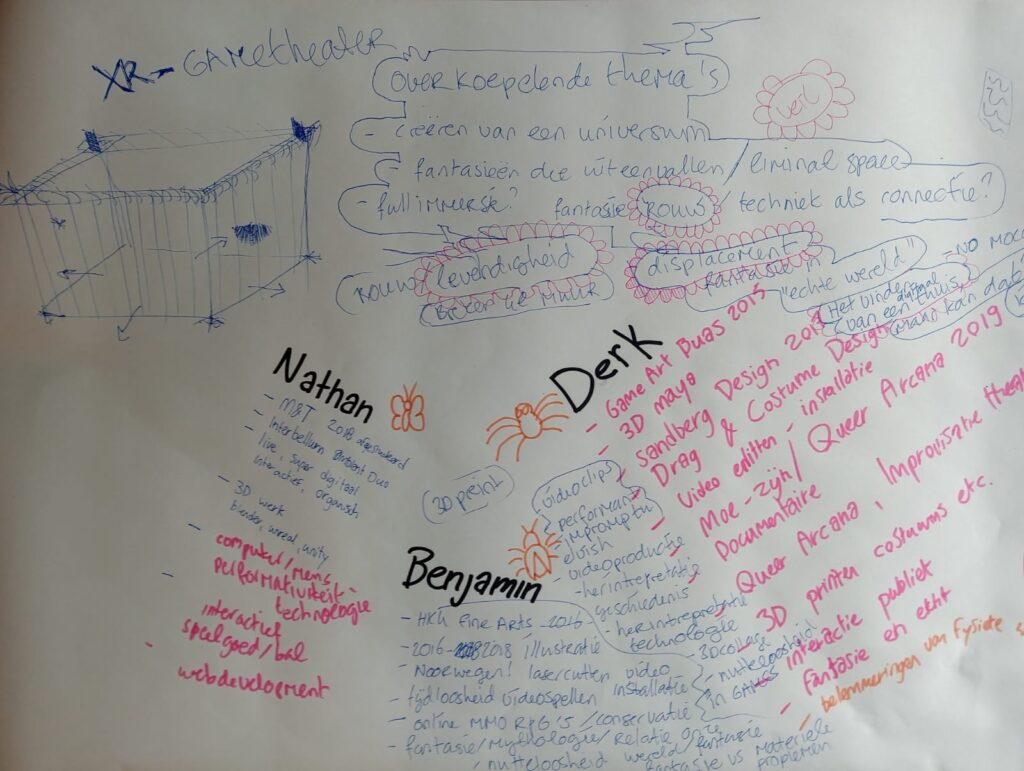

Before the project started in November 2023, we sat around the drawing board, discussing the kind of interaction and intentionality we were aiming for over the next six months. After sharing our experiences with creative technology productions, we came to the conclusion that every member of the team had been involved in making fantastical worlds in which the participant could imagine our created space as reality. We started asking: “Why do we as makers so often try to pierce the veil into these fantasy worlds?”

“Can we, as gamers and digital makers, ever truly exist in another world, or do the limits of technology, once they become apparent, always pull us back into our own reality?” Thinking about these questions led us to the concept of a kind of “digital homesickness”: the sadness you feel when you are pulled back into your own reality after having artificially existed in another dimension.

The team started by writing down each member’s individual talents and mastery of software tools. It became apparent that the team had a lot of diverse and overlapping skill sets. For the time being, we decided to work without a defined structure, with the three autonomous artists collaborating and co-creating freely, based on intuition.

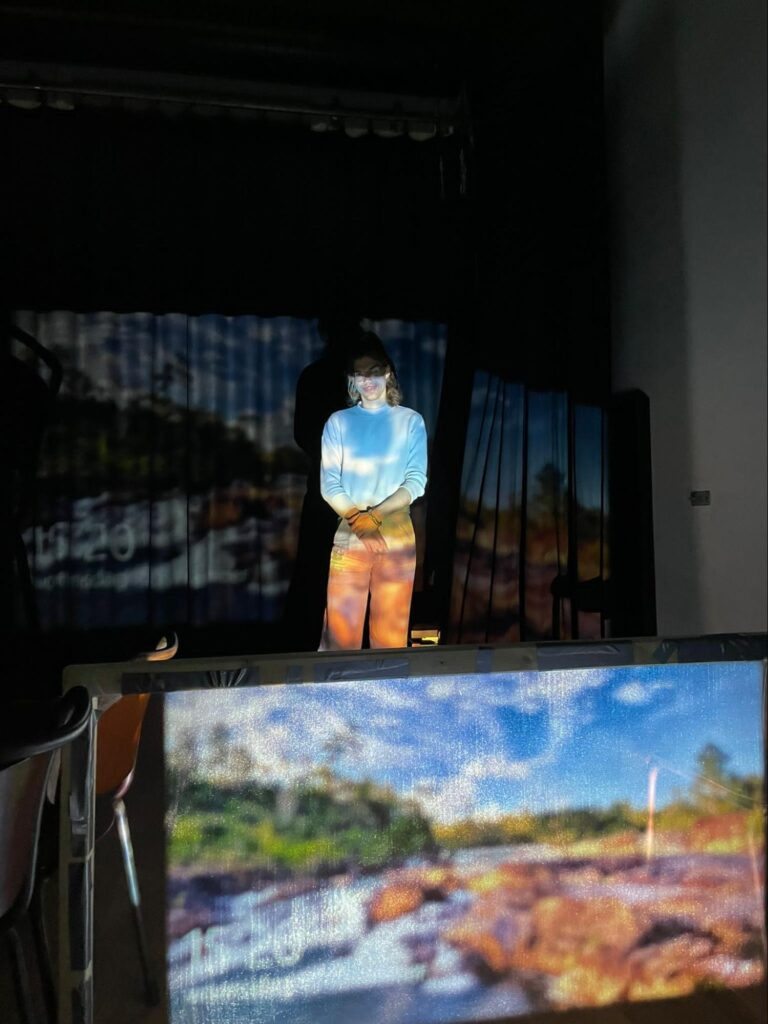

The first two weeks of the program were dedicated to getting to know Theater Utrecht, Innovation:Lab and the space we would be working in. We also wanted to run a small material study by projecting onto a small test screen, experimenting with the projection potential of grey tulle. We found that plain grey tulle already caught the light with good density, so we could proceed with the material quite easily.

We anticipated that while a small screen would be structurally easy to manage, an upscaled holographic screen would introduce more structural and material issues, such as wrinkles in the screen, wobbliness in the structure and even potential breaking points in the frame.

We built a small makeshift 16:9 wooden frame and stretched grey tulle fabric over it. An accessible home cinema projector provided the image.

In the last week of November 2023, we gathered enough materials to build a larger version of our test screen: a wooden frame 4 meters wide and 2 meters high. We wanted to test the emotional effect of seeing a projected character at eye level, and whether the character’s size alone would already make the figure feel relatable.

We also started experimenting with NeosVR to see if we could get life-size, moving characters from the online multiplayer world onto the screen.

The gameplay of NeosVR bears similarities to that of VRChat and AltspaceVR. Players interact with each other through virtual 2D and 3D avatars capable of lip sync, eye tracking, blinking, and a complete range of motion. The game may be played with either VR equipment or in a desktop configuration.

We wanted to use NeosVR as a performance tool, giving a massive multiplayer audience access to the performance.

We asked ourselves: “Is NeosVR stable enough as a development tool to host performances, and does its interface offer enough possibilities to develop complex interactions with the participant without breaking?”

In the first weeks of December 2023, we explored the possibilities of Resonite, the successor platform to NeosVR, as a performance tool. We ran into several problems using Resonite in our setup: the scenes crashed frequently, and we could not reliably export a live orthographic camera feed to OBS or to a second screen in order to send it to a projector.

We had to ask ourselves seriously whether we could make a functional build of the performance in Resonite, and whether we could master this new software before the day of the presentation. We had doubts about the stability and flexibility of the systems we were researching.

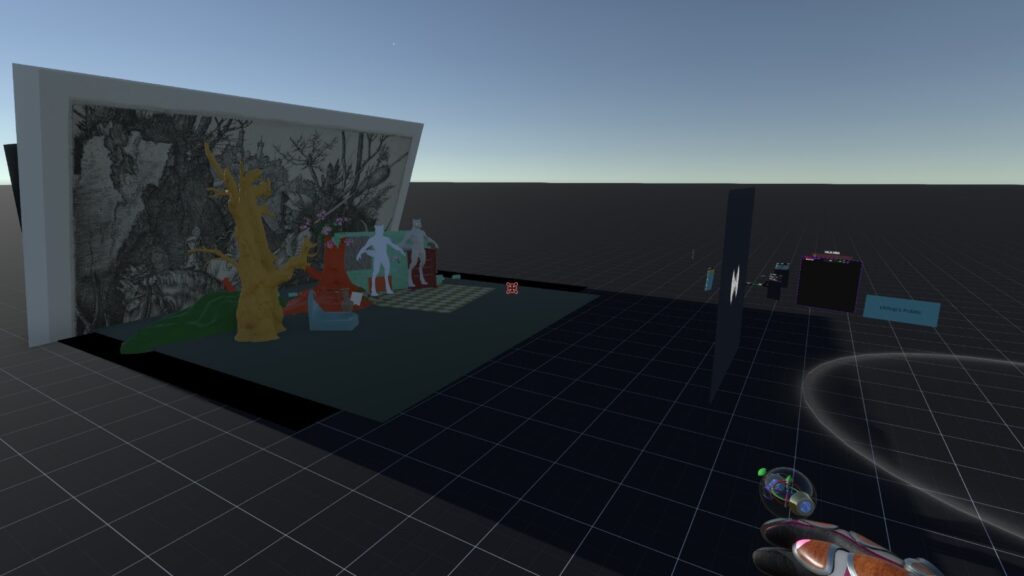

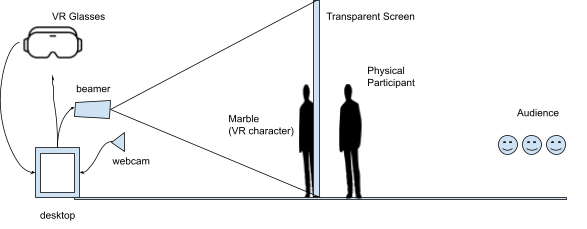

We placed an orthographic virtual camera in the Resonite scene. The idea was to capture what the VR characters were doing in Resonite and project it onto the tulle, creating a live connection between the audience and the participant.

The main decision in the second week of December 2023 was to switch from Resonite to Unity as our main engine. We loved the idea of hooking the performance up to a large multiplayer world, but we found the technology too precarious for the level of stability we wanted during the performance. Our team was sufficiently proficient with Unity, and we felt confident about making the performance in the new engine.

The questions we asked ourselves were: “Can we create a low-latency webcam livestream in Unity, so the VR actor can see the audience clearly?” and “Can we create an orthographic camera viewpoint and export the camera feed to a projector?”

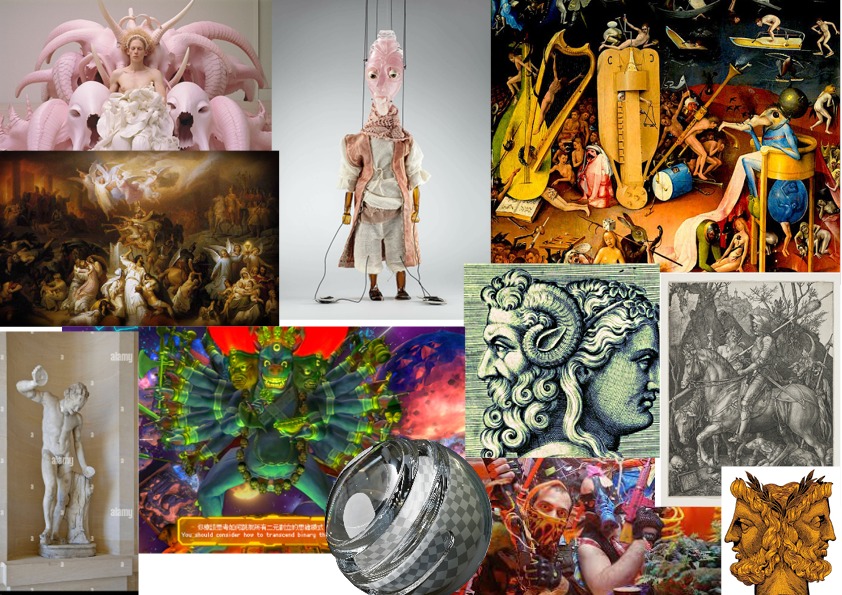

We also experimented with several moodboards to establish a first impression of a visual universe in which the VR character could exist. We experimented with glass and meat shaders to see which textures would evoke an alienesque, otherworldly, subterranean feeling. This already laid a good foundation for the final aesthetic.

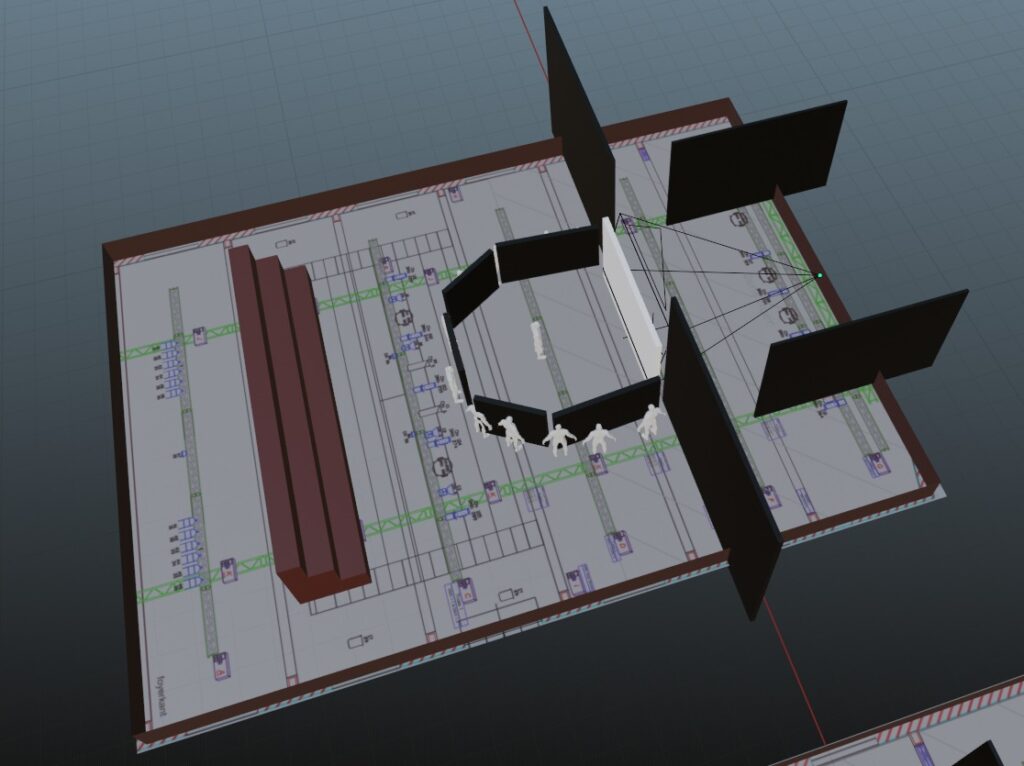

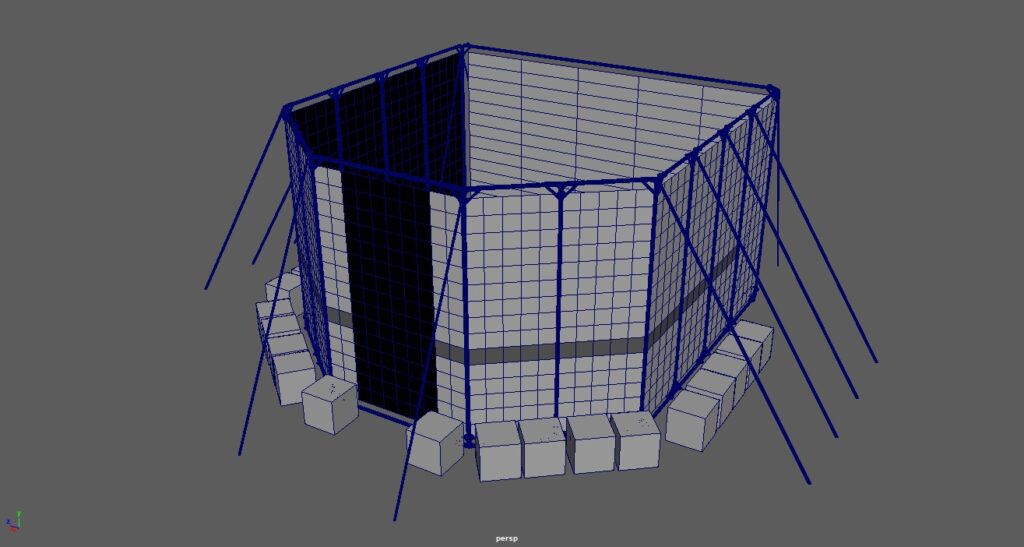

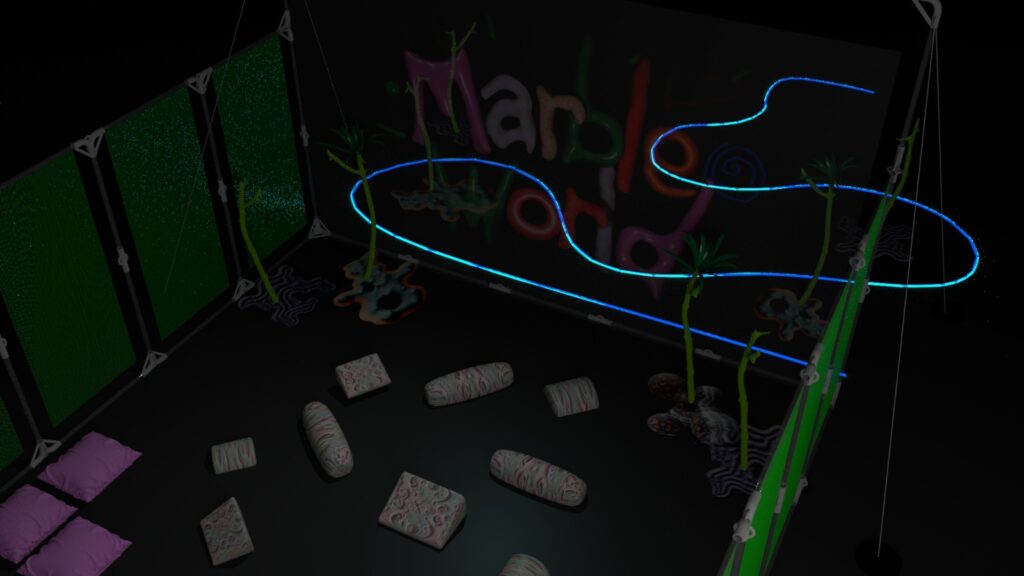

In the last week of December, we focused on the first parts of the physical installation and experimented with our first 3D-printed parts. The main objective was to build a large arena in which the VR character could meet the participant intimately. The arena would also hold the screen, so it needed to be very stable.

One of the questions was: “Can we make a modular physical structure that fits in a bag, while also being sturdy enough to work at large scale (5 meters deep x 4 meters wide)?”

“What kinds of materials would both be lightweight and sturdy?”

We modeled the connection pieces in 3D software, printed the first generation and tested their durability. We found that the first generation had been printed in a way that left many weak points, so we had to revise both the 3D models and the printing method to reduce the number of breaking points in the structure.

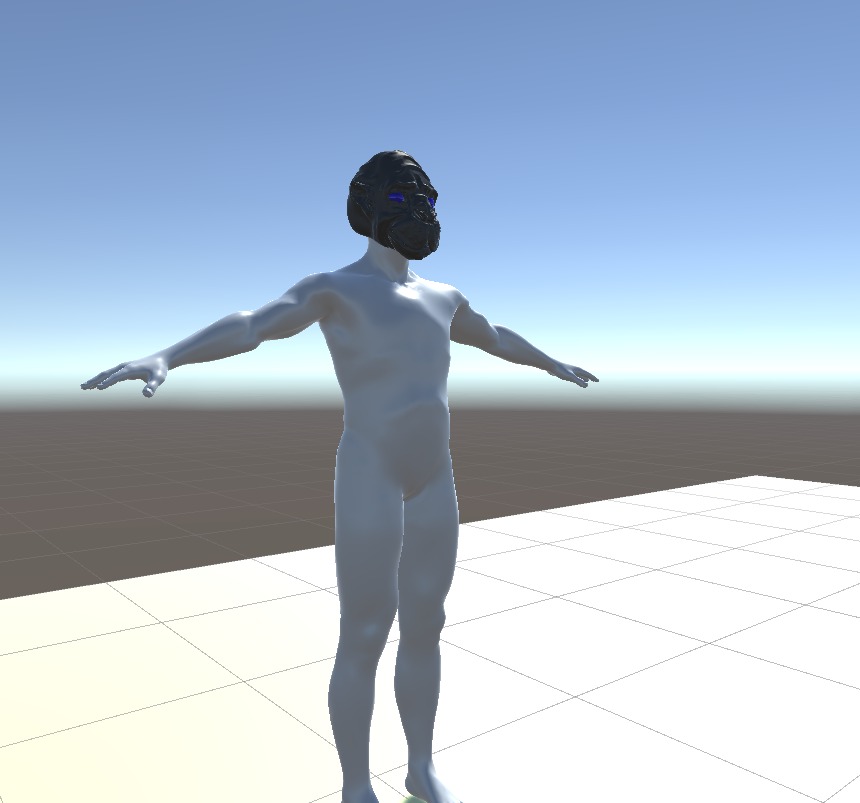

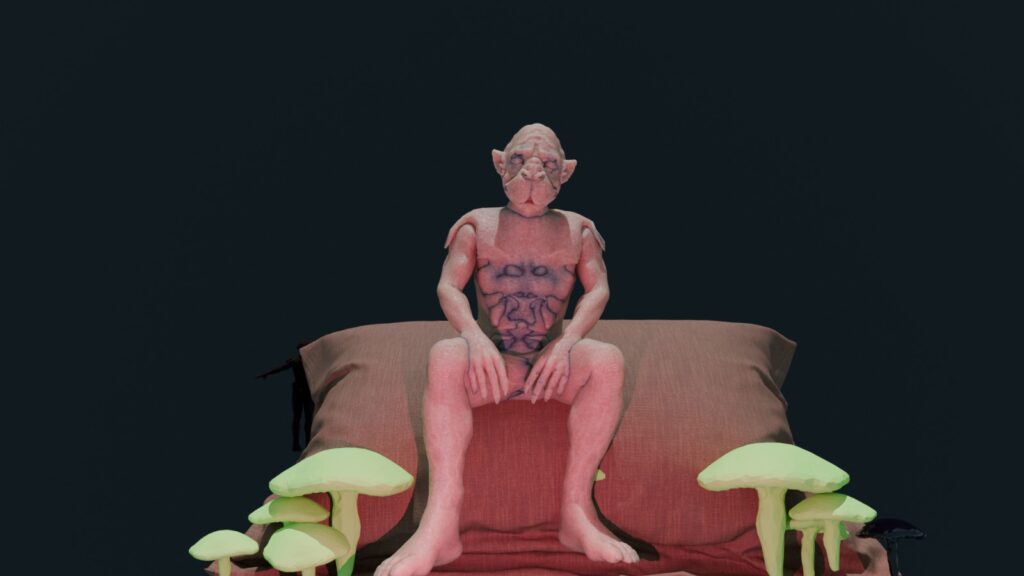

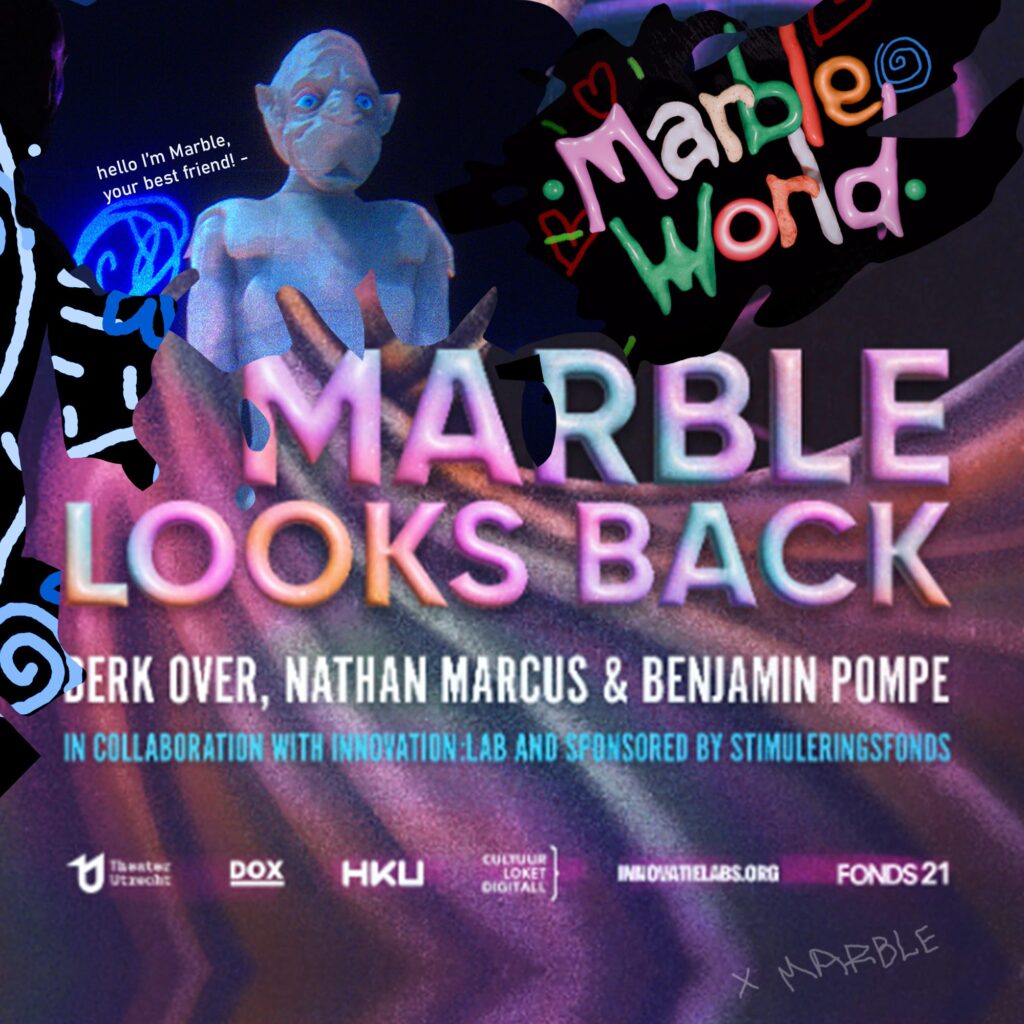

In the second week of January 2024, we focused on creating the final design of Marble, looking for a balance between a design that would evoke emotional connection and one that would promote estrangement. We drew inspiration from vintage cartoons, such as the older Lord of the Rings adaptations, and from live-action puppet shows.

In the development process, we immediately started working in Blender, adding volumes onto pre-existing models. By doing this, and by studying reference material, the general outline of “Marble” started to take shape: a being somewhere between a walrus, a goblin and a human.

In the third week of January 2024, we turned our attention back to the physical screen. We found that the large structure was rather wobbly and that the new connection pieces broke quite easily under pressure. The print orientation was still not right: it layered the models in a way that still left many weak points.

The question that we asked was: “What physical safety measures does the frame and screen need to adhere to in order to be able to safely exhibit the project?”

We decided that the screen frame needed attachment points so it could be hung from the ceiling with steel cables. This required alternative 3D models with small loops to attach the frame to. We also decided to stabilize the screen with weights on the ground and brace it like a tent, which meant designing weights and attachment systems.

In the last week of January, Marble took its final form. The structure of the face was finalized, and the only remaining design task was to find the color and shape of the eyes.

We developed the skin shader further and asked ourselves: “Can we find a skin shader that looks realistic and luminous, but also translates well to projection on tulle?”

The character was made in 3D modeling software and still had to be brought into Unity.

In the last week of January, we met with scenographer Malou Palmboom to talk about the audience experience of the performance. The challenge was that we wanted the performance to be accessible to an audience of 20+ people, while still giving the participant a feeling of intimacy and privacy as they are being watched. We experimented with the idea of placing the audience behind a translucent screen, creating a visual barrier between the performer and the audience.

We asked ourselves the question: “How can we recreate an intimate space in a large theatre room?”

We made many 3D visualizations on the theater’s floor plan to get a good sense of how much room the project had. We found that because the projector already needs a lot of distance from the screen, the participant’s area is already quite limited.
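The distance problem follows from the projector’s throw ratio, which projector spec sheets define as throw distance divided by image width. A minimal sketch of that arithmetic, assuming a hypothetical throw ratio of 1.2 (the projector’s actual value is not stated here) and the 4-meter width of the larger test screen:

```python
def throw_distance(throw_ratio: float, image_width_m: float) -> float:
    """Lens-to-screen distance in meters.

    Throw ratio = throw distance / image width, so distance = ratio * width.
    """
    return throw_ratio * image_width_m


SCREEN_WIDTH_M = 4.0       # width of the 4 m x 2 m test screen
ASSUMED_THROW_RATIO = 1.2  # hypothetical value; read the real one from the projector's spec sheet

distance_m = throw_distance(ASSUMED_THROW_RATIO, SCREEN_WIDTH_M)
print(f"Projector sits roughly {distance_m:.1f} m from the screen")
```

Because the distance scales linearly with the ratio, a shorter-throw lens would reduce it proportionally.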

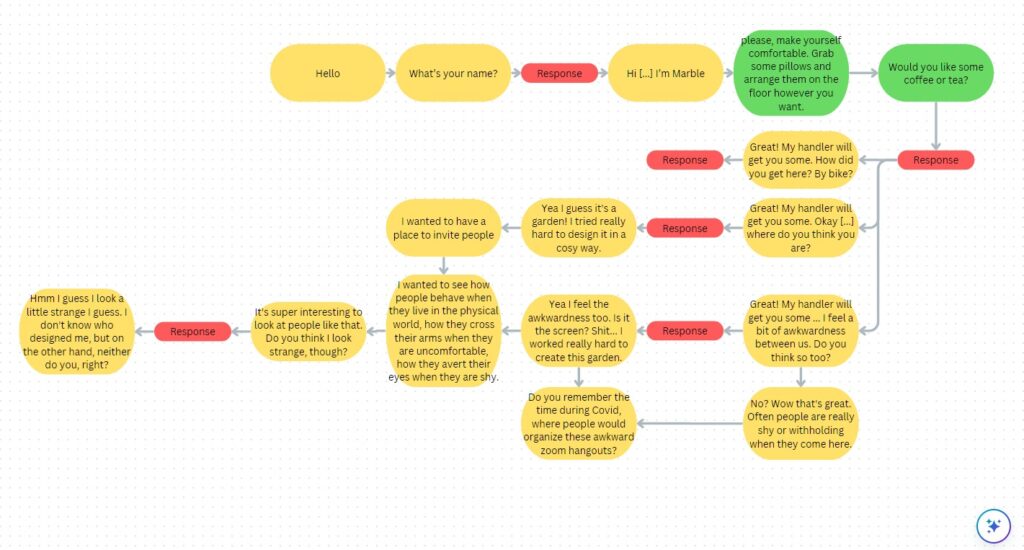

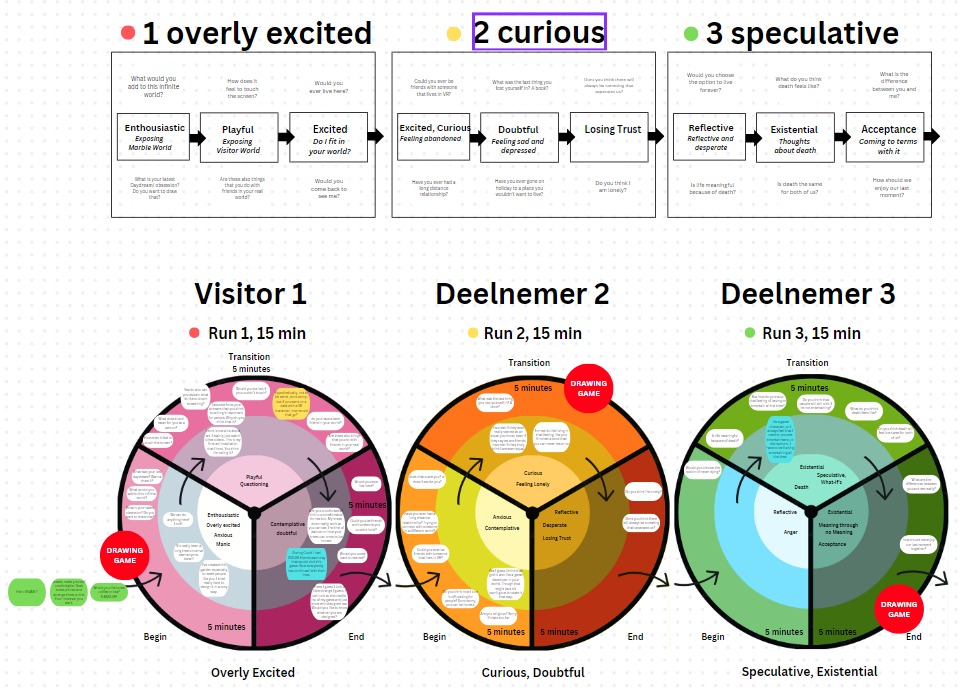

We also worked with Malou on a first conversational structure for the script. Marble Looks Back is an unscripted performance, but it has many guiding questions that can be asked in a strategic order to steer the conversation between Marble and the participant. We created a branching narrative flowchart in which the questions are linked to topics.
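A flowchart like this maps naturally onto a small graph: each topic holds its guiding questions and links to follow-up topics. The sketch below is a hypothetical illustration, assuming a breadth-first walk gives a sensible default order; the topic names and question strings are placeholders, not the performance script.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Topic:
    """One node in the conversation flowchart."""
    name: str
    questions: list[str]
    next_topics: list[str] = field(default_factory=list)


# Hypothetical placeholder content; the real topics and questions are not reproduced here.
FLOWCHART = {
    "opening": Topic("opening", ["opening question 1", "opening question 2"], ["play"]),
    "play": Topic("play", ["play question 1"], ["reflection"]),
    "reflection": Topic("reflection", ["reflection question 1"]),
}


def default_order(flowchart: dict[str, Topic], start: str) -> list[str]:
    """List guiding questions topic by topic, following links breadth-first.

    The performer can always leave this order; it only suggests a route.
    """
    ordered: list[str] = []
    visited: set[str] = set()
    queue = deque([start])
    while queue:
        name = queue.popleft()
        if name in visited:
            continue
        visited.add(name)
        topic = flowchart[name]
        ordered.extend(topic.questions)
        queue.extend(topic.next_topics)
    return ordered


for question in default_order(FLOWCHART, "opening"):
    print(question)
```

Keeping topics as linked nodes rather than one fixed list lets the performer jump to any branch mid-conversation while still having a suggested route.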

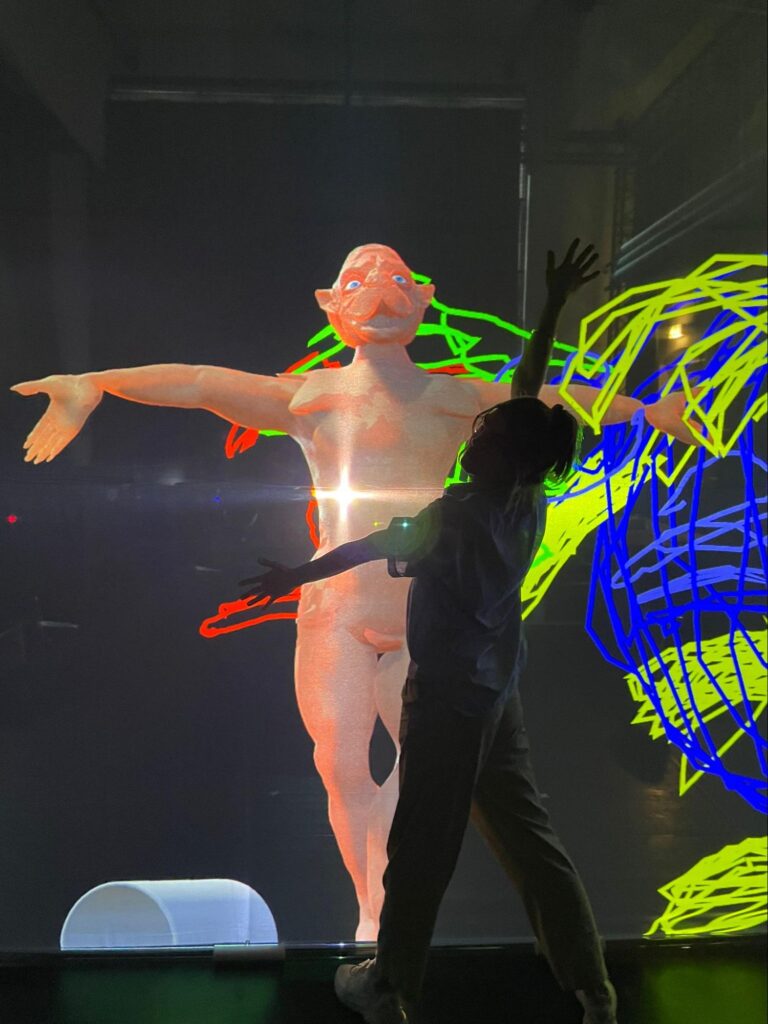

In the second week of February, we focused on hosting a first playtest. We invited two members of Innovation:Lab to interact with Marble. All the elements (Marble’s aesthetic, the objects in the world and the projection screen) came together for the first time. We considered the playtest a success: in the 10 minutes that the participants engaged with Marble, they had full conversations with little technical latency. The interaction also felt robust and held up well throughout the playtest.

After the playtest, the participants answered some questions the team had prepared:

“Do you believe that Marble exists as a being?” “Did you emotionally engage with Marble while the interaction was happening?”

Based on the answers, we concluded that we were well on our way to making “Marble Looks Back” an engaging and interactive performance. The playtesters mentioned that they already felt they were having a conversation with another being, and that they quickly felt comfortable and safe answering Marble’s questions.

In the third week of February, we hosted an exchange with students of the “Interactive Performance Design” (IPD) program at HKU. We invited them because one of the objectives of the project was to make an installation with accessible technology and affordable materials. We performed two sets of Marble Looks Back.

We ran two separate playtests: one with a participant without an audience and one with a participant in front of an audience. We quickly found that even with an audience present, the participant felt quite at ease and engaged with Marble in a calm and natural manner. We suspect this is because the participant faces Marble and never actually sees the audience while participating. We were also surprised to discover that even people with introverted tendencies felt invited and brave enough to open up to Marble’s questions.

After the playtests, we showed the IPD students what Marble’s “cockpit” looked like and how we designed for interactive dialogue. Based on their feedback, we concluded that our way of working does have a low enough technical and material threshold for new makers to connect to.

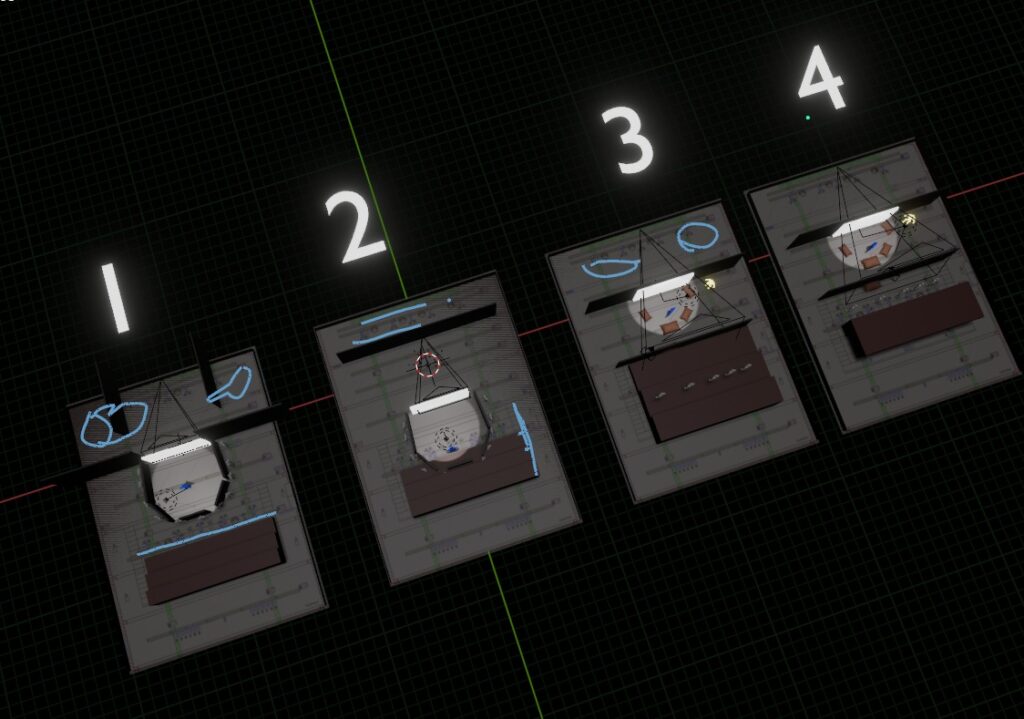

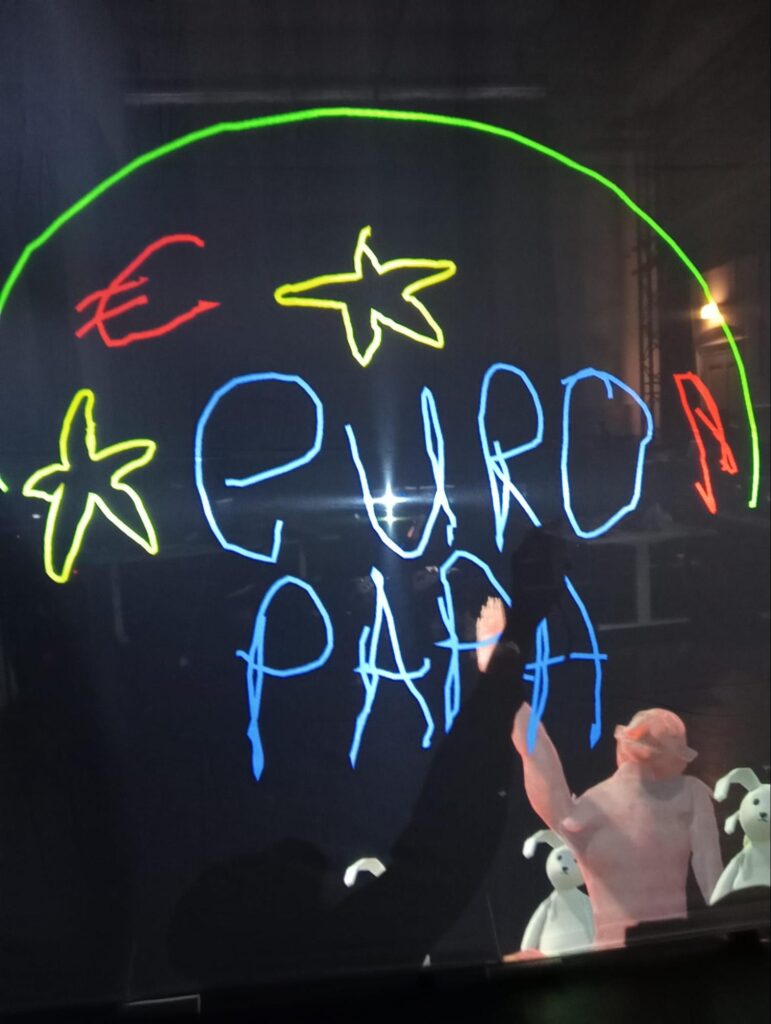

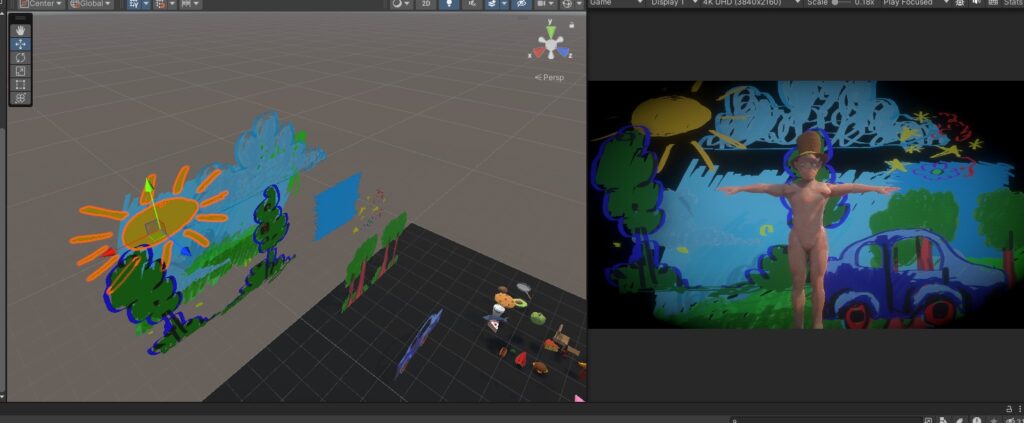

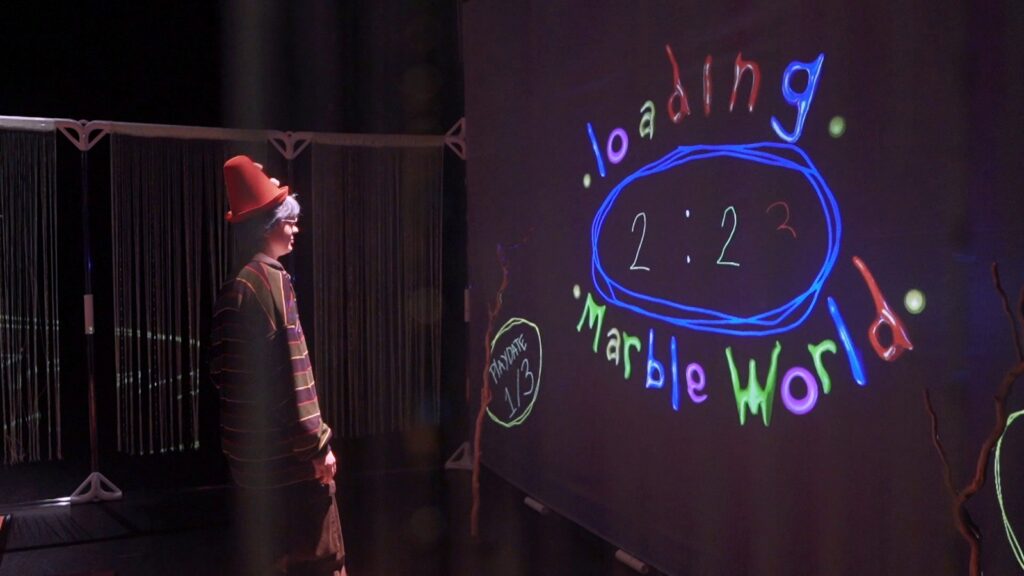

In the last week of February, we settled on our defining environmental art style and established the drawing game. In this game, the participant uses their phone’s flashlight to make a drawing together with Marble. The webcam registers the light, and the VR actor in Unity lines up the hand of the VR character (Marble) with the participant’s on screen, so that it looks like they are drawing together. The playtests showed that the drawing game works quite intuitively, although its lack of detail could be read as either distracting or charming, depending on the participant.
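The text does not specify how the webcam registers the flashlight. One simple approach, shown here as a sketch rather than our actual implementation, thresholds each grayscale frame and takes the centroid of the brightest pixels; the threshold of 240, the frame format and the helper names are assumptions. In Unity, frames could come from `WebCamTexture`, but this sketch uses plain Python lists to stay self-contained.

```python
from typing import Optional, Sequence

# A grayscale webcam frame: rows of pixel brightness values from 0 to 255.
Frame = Sequence[Sequence[int]]


def find_light(frame: Frame, threshold: int = 240) -> Optional[tuple[float, float]]:
    """Return the (x, y) centroid of all pixels at or above `threshold`.

    Returns None when no pixel is bright enough, e.g. the flashlight is off.
    """
    sum_x = sum_y = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


def extend_stroke(stroke: list[tuple[float, float]], frame: Frame) -> None:
    """Append the detected light position (if any) to the participant's stroke."""
    point = find_light(frame)
    if point is not None:
        stroke.append(point)


# Tiny synthetic 4x3 frame with a bright spot covering two pixels in row 1.
frame = [
    [10, 12, 11, 9],
    [13, 15, 250, 255],
    [8, 10, 12, 11],
]
stroke: list[tuple[float, float]] = []
extend_stroke(stroke, frame)
print(stroke)  # [(2.5, 1.0)]
```

In the performance itself, the VR actor still matches Marble’s hand to the participant’s position by eye; a sketch like this only covers locating the light.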

We asked ourselves: “Is there a complete illusion that the participant is drawing a picture together with Marble? Are the sensory reactions and latency well-proportioned? Is there a true sense of co-creation?”

We made the drawing game in Unity. Marble actually draws the lines in 3D space, but because they are captured by an orthographic camera, it looks as if Marble is drawing on a 2D plane together with the participant.
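The flattening effect comes from the orthographic projection itself: it maps x and y straight onto the screen and discards depth, while a perspective camera divides by depth. A minimal comparison sketch, assuming a simplified camera space where z is the distance along the view direction (not Unity’s full projection matrix); the function and class names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point3:
    x: float
    y: float
    z: float  # depth along the camera's viewing direction


def orthographic(p: Point3, half_height: float, aspect: float) -> tuple[float, float]:
    """Map a camera-space point to normalized screen coordinates in [-1, 1].

    Depth is discarded, so strokes at different distances land on one flat plane.
    `half_height` plays the role of Unity's Camera.orthographicSize.
    """
    half_width = half_height * aspect
    return (p.x / half_width, p.y / half_height)


def perspective(p: Point3, focal: float = 1.0) -> tuple[float, float]:
    """Pinhole projection for comparison: points shrink toward the center with depth."""
    return (focal * p.x / p.z, focal * p.y / p.z)


near = Point3(1.0, 0.5, 2.0)
far = Point3(1.0, 0.5, 6.0)
ASPECT = 2.0  # the 4 m x 2 m screen

print(orthographic(near, 1.0, ASPECT), orthographic(far, 1.0, ASPECT))  # (0.5, 0.5) (0.5, 0.5)
print(perspective(near), perspective(far))
```

Under the orthographic camera, a line segment drawn at any depth keeps the same on-screen position, which is why strokes in 3D space read as a flat drawing.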

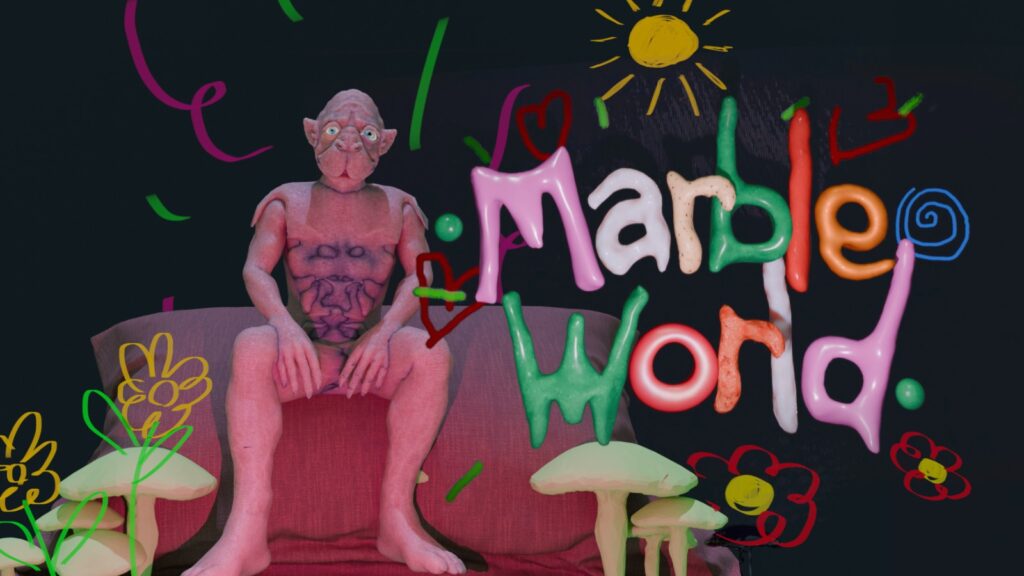

In the first week of March, we started working on the intro video and the final aesthetic material of the art style. The intro video was meant to guide people into the performance and to introduce the universe that Marble finds themselves in. The video would mimic a classic 90’s cartoon art style, with 3D graphics and custom music.

A custom font was created for the project and the art direction of the intro video was continued into the final aesthetics of the installation. We made 3D renders to communicate the final vision and to check whether all the visual material was coming together in a satisfying way.

We asked ourselves: “How can we let the universe of Marble, from the intro video and the 3D world, blend seamlessly into the physical world?” We wanted to create an alienesque children’s room with lots of pillows for people to lounge on, while keeping the overstimulating and unsettling feel of live-action 90’s children’s cartoons.

Throughout March, we worked on 3D printing the complete set of connection pieces for the structure. The priority remained that all the pieces had to be portable in a standard-sized car. In total, this came to over 60 individual 3D-printed pieces. Once they were done, we built a smaller version of the structure in our garden and tested the cables and weights to see how much weight the structure needed for stabilization.

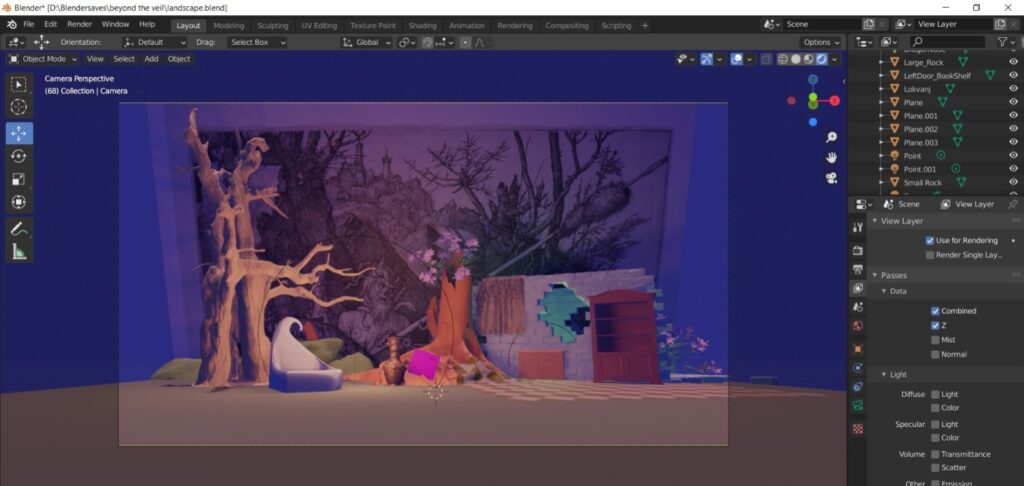

We also completed the background elements of the 3D world in which Marble would perform. These held the interactable objects for the performance and had to give the audience the impression that “Marble World” has “limitless” possibilities.

In the weeks before the performance, we sat down again with Malou Palmboom and dived deeper into the psychology of the main character.

In the performance “Marble Looks Back”, three people each have 15 minutes to talk to Marble and play games with them. As the performance progresses, however, Marble asks more serious questions and, together with the participant, speculates on whether there is a real connection between them at all. Marble grows more and more existentially depressed upon realizing how much distance exists between Marble and the participant.

On the 20th of April, we prepared the Paardenkathedraal for the premiere of Marble Looks Back. We built the installation and set up the audio and software.

Some issues came up while connecting the audio for Marble’s voice, but they were eventually resolved.

During the two screenings, with Sasa and Derk performing respectively, we saw a big difference in the energy of the two performances, influenced of course by each individual performer. The audience also had a big effect on the outcome. The second run had much more crowd engagement: people were not shy to comment, laugh and react, which we believe affected the performance itself. The energy and direct feedback from the audience possibly made Marble more immersive in the second run.

We asked ourselves: “How was the performance received by the audience, the participant and the performer?”

“Will the installation and setup work in the Paardenkathedraal?” “Is the connection between the VR headset and the hardware downstairs fast enough to function?”

In conclusion, we look back on this experience extremely positively. Stimuleringsfonds Digitale Cultuur and Innovation:Lab granted us a great amount of freedom and time to develop a project that is authentic and dear to our hearts. We aimed to produce a project that could be shared with makers who want a low-threshold entry into XR production.

We are excited to see how the research and project “Marble Looks Back” will develop in the future!